By Bill Broenkow (13 May 2016)

This account tells the story of my involvement in introducing computers to Moss Landing Marine Laboratories. There's not much logic or plan to how this came about. In those days (the early 1970s) things just happened as teaching and research evolved. Furthermore, I had no formal education in computers or electronics, but in the beginning no one did.

My first experience with computers came when I was a graduate student modeling the pH of seawater, using an iterative method that could be carried out only by computer. At the University of Washington this meant an IBM 7070 locked away somewhere in the only computer room on the upper campus. I wrote the program in FORTRAN on 141 punch cards, each holding a single statement or numerical constant, placed them in a cubbyhole and returned the next day to find either printouts or error messages. (Interestingly, today, with atmospheric CO2 levels rising, the processes controlling pH are a major concern.) It took many walks up the hill to get it right. That's how it was done in 1963. That was my total computer background before coming to MLML.

After arriving at MLML in the fall of 1969, I found myself to be the computer expert. My courses in chemical and physical oceanography involved lots of calculations, which at UW had been done with tables: Knudsen Tables to determine seawater density from salinity and temperature, further tables to calculate pressure effects, and finally the geopotential height from Nansen bottle stations and on to geostrophic currents. These required two-way interpolations and trig and log tables. Tedious. In the late 1960s this began to change with the use of the UW IBM, because computer-compatible algebraic equations were being developed at various marine institutions to eliminate the table work. However, during my first years at MLML I taught with the appendix tables in 'The Oceans', the standard marine science text of the day. And what's even more archaic, I taught the use of the slide rule.

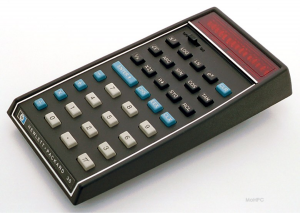

In 1972 the scientific world was revolutionized by the HP-35 calculator. This was big. Its $395 price did not stop more than 100,000 from being sold in its first year. The first calculator in space. I still own mine. No more trig tables, no more slide rule, but at MLML we still used those density tables. Two years later the $795 HP-65 programmable calculator arrived. Now those of us oceanographers without access to mainframes could write our own programs. I spent many wonderful hours programming salinity, density, thermometer corrections, celestial navigation calculations: a never-ending list of computer applications. I still have the little magnetic cards holding these ancient programs, and the calculator still runs.

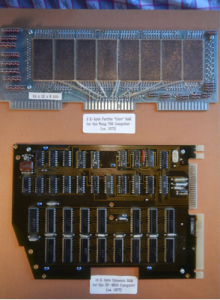

At nearly the same time a bigger revolution occurred: the first real desktop computer, the suitcase-sized Wang 720C Calculator. It was called a calculator only so that the hierarchy of managers in computer science departments would not need to authorize its purchase. But it was a true computer. I cannot recall how I managed to get Director Hurley to pay the $7000, but we soon had one at Moss Landing. We established a computer lab, really only a small room with a 24/7 sign-up sheet. It had a real computer language to use and teach. Now this wasn't FORTRAN; it was more like machine language. The Wang 720C used an assembly programming language, with some codes entered from the keyboard and some by switches. The 2048-byte ferrite core memory held the program 'steps' (up to 1984 of them), loaded from the top down, while data were loaded from the bottom up, so too much of one overwrote the other. Today it's hard to imagine that this small system was useful.

The Wang 720C, September 1973. The first computer at MLML, capable of 1984 computing steps, with an operating system consisting of a 64x32 diode ROM and a ferrite core RAM of 64x32x8 magnets. Input was by cassette tape and single keys for the low-level commands; output was by Nixie tubes or an IBM Selectric typewriter. The military still uses ferrite core memory in radiation-hardened satellites.

This sudden burst of technology required some effort on my part to learn how computers really work. I thought I should know something about the machine language instructions that the CPU chips processed. I bought a Heathkit microprocessor trainer, wired it up, took the self-taught course, wrote the required number of assembly language programs, passed the test, and realized that I really did not want to become an assembly language programmer.

This era was called the Computer Revolution for good reason. Suddenly the little folk had enormous computational ability, and it didn't take long for friendlier scientific computers to come along. Ah... 1974: the HP-9820, with an algebraic programming language in RPN (reverse Polish notation), paper tape output, a magnetic card reader, and expensive RAM expansion modules and mathematics and peripheral control ROMs. All very exciting to me, and another $7000. I had to get one, of course.

In the early 70s the MLML physical oceanography group made the first synoptic survey of salinity, temperature and nutrient distributions in Monterey Bay. This work came to the attention of Bob Wrigley at NASA Ames Research Labs in Mountain View. In the mid 70s Bob was a pioneer in measuring phytoplankton concentrations in the ocean and was using his new hand-held radiometers to do it. Simple, eh? Phytoplankton-rich waters are green, poor waters are blue, wheat fields are amber. He wanted surface truth data in the Bay like those he had collected over agricultural areas, and asked us to make chlorophyll measurements as he flew his radiometer. I had no idea where this new (to me) remote sensing would lead. He kindly provided me with thermistors, fluorometers and strip chart recorders and supported my research assistant, Carl Schrader. We measured chlorophyll and suspended sediment concentrations and Secchi disk depths, and compared ocean color to a Munsell color chart.

Until 1975 we used Nansen bottles, but things changed when we began using a Plessey 9400 conductivity-temperature-depth (CTD) profiler. No more hydro-casts. Data were recorded on the XYY chart recorders provided by Bob. Then we (yes, some poor graduate student) would pick off conductivity and temperature values from the graph and calculate salinity, density and dynamic height anomaly. We entered these into HP-9820 computer files to store the data for further processing. Not much later we added a Teletype printer with a paper tape punch ($700).

But the big event in my computing and scientific life began in early 1977 with the HP-9825 desktop computer ($5900). This was a very mature machine: the HPL computer language, a QWERTY keyboard, 16 kbytes of memory, a plotter, a disk drive and a paper tape printer. For doing science through data acquisition it had plug-in interface boards: 16-bit parallel and the HP-IB interface, which connected directly to HP instrumentation. This computer was a revolution for engineers and scientists, and more than 28,000 were sold during its five-year life. With the 9825 we could record digital data directly from my CTD profiler using an HP frequency counter, programmable switch and voltmeter. The HPL language and HP-IB instrumentation were to shape my future.

The HP-9825, June 1977. This revolutionary computer launched innumerable projects at MLML: first our work on Dennis Clark's CZCS satellite surface truth experiment, later our CTD, current meter and ocean profiling instruments for John Martin's VERTEX project. Ultimately our association with Dennis Clark led to MLML's continuing work (1997-present) with the Marine Optical Buoy, MOBY.

After we had worked with Bob for a year or more, he thought it would be a good idea for me to see how the experts were developing surface truth measurements for the Coastal Zone Color Scanner (CZCS), to be launched on the Nimbus 7 satellite. He paid for my October 1977 flight to Galveston, Texas, to join the RV Gyre cruise headed by Dennis Clark. Dennis was a research scientist at NOAA's World Wide Weather Center in Suitland, Maryland, who had established a comprehensive program to measure spectral water-leaving radiance, or simply the color of light reflected from the upper layer of the sea. These are the definitive surface truth data with which the CZCS scans would be corrected for atmospheric attenuation. He was using his brand-new, state-of-the-art spectroradiometer, lowering it to several depths to produce radiance values at 80 wavelengths. To eliminate wave noise, many scans were made at each depth. This is a data-intensive process, and to handle it Dennis was using his brand-new HP-9825!

He had a complicated program: two instrument vans, an electronics engineer and several technicians to handle the instruments. I was a complete stranger to this work and to Dennis, whom I had not met until the day I arrived. As a guest, I stayed out of his hair but observed his preparations. One of his technicians was assigned to use the 9825 to acquire data from the radiometer. After two days I saw that he was having trouble programming the computer. I offered Dennis my help, explaining that he would then depend on the way I stored data in my database. He thought this over for a day or so and agreed: “OK”. As they say, the rest is history.

I had a week at the dock to learn about his instruments and data processing needs. After three days at sea on the Gyre I was still working on the programs, but at the first station (October 25, 1977) my programs brought in the data, did the averaging, applied the calibrations, and plotted the corrected radiance spectra. At that time I adapted the way we had been organizing our oceanographic data into what we now call MLDBASE: just a computer filing system to hold the radiance data and the identifying (metadata) information.

I worked with Dennis for four years during the CZCS era, learning about satellite oceanography from his colleagues at NOAA, NASA and various universities. There was much to learn. One occasion was especially memorable. On a trip to the Visibility Lab at Scripps Institution of Oceanography, I met Ros Austin, who was developing image processing software to display and interpret data from the CZCS. He had DEC VAX computers with satellite data stored on huge disk drives. The programs that Howard Gordon of the University of Miami had developed over several years to remove the atmospheric haze were really complicated. At Scripps that day Ros started the image processing program, we went to lunch, and late in the afternoon the corrected map of 'water-leaving radiances' and computed chlorophyll concentrations appeared on a black and white display.

Between 1979 and 1996 my group participated in John Martin's highly successful VERTEX program. The National Science Foundation called John's discovery that iron is a limiting nutrient one of the most important oceanographic discoveries of the century. During this time Dennis funded one graduate student (Jeff Nolten and later Richard Reaves) to work on the CZCS project. He kept me in a holding pattern until he could launch his Marine Optical Buoy (MOBY) project.

Before the earthquake (October 17, 1989), John Martin introduced me to David Packard (co-founder of Hewlett-Packard), who saw that we were still using an old HP-9825. I think John had suggested that I might work with the Monterey Bay Aquarium to display weather and ocean conditions there. Mr. Packard gave me a tour of the Aquarium and of his mechanical tide-predicting machine. We discussed how we could measure waves, tides and weather to display as an exhibit. As a result he provided the HP computers (HP-9826), data acquisition instruments (HP-3497), weather station, current meter and ocean buoy. Somehow along the way I asked if he could provide a second computer to share the programming load. That's how I got (and still have) an HP-9816. I picked it up at the Aquarium one day in 1985. The box was addressed to Mr. Packard, who had paid list price out of his own pocket. The ocean and weather station began operation in March 1986, and we continued operating it until 1995, when the U.C. Santa Cruz REINAS project took it over.

After the earthquake Dennis designed the MOBY prototype. MOBY was to be an autonomous buoy moored near Lanai, Hawaii. This first-of-its-kind system provides daily 'water-leaving' radiance measurements used to calibrate ocean color satellites, whose sensors slowly degrade over time. Luckily for me, at that time I had some brilliant graduate students: Mark Yarbrough, Mike Feinholz and Richard Reaves. Mark had designed and built our new Integral CTD Rosette System. Once again I asked Dennis to consider carefully whether he would give us the job of building, programming, calibrating, launching and operating this device and reducing its data. He was truly impressed with Mark's mechanical, electronic and organizational skills, Richard's skill at low-level assembly language programming, and Mike's methodical approach to calibration. He took us on... again.

The computer world changed dramatically over this period. At the temporary MLML campus in Salinas we used a MicroVAX II as a local area network server and a distributed computing facility for the several portable buildings. Labs used terminals to reach the MicroVAX for various tasks, including local e-mail. During a visit, Dennis' Miami colleagues installed their state-of-the-art CZCS image processing software on our MicroVAX. My student Ed Armstrong used this FORTRAN program, called DSP, for his master's thesis work and later put this experience to use at the Jet Propulsion Laboratory.

There was no Internet yet, and during this time personal computers evolved. Everyone used PCs or Macs. When the Internet finally arrived in 1998, I was asked what name to use for our Internet domain. Without direction from above I chose mlml.sjsu.edu. During the 'Salinas Marine Lab' years I discovered a life-changing programming language, MATLAB. We rewrote all of our HPL programs in MATLAB, converted our now-extensive MLDBASE data, and moved away from the VAX into the future, which for us meant PCs running MATLAB (sadly, not Macs at that time). With the students in my Satellite Oceanography class I rewrote the Miami DSP CZCS image processing program in MATLAB. The image processing that had taken hours at the Visibility Lab ran in 5 minutes on our PCs.

For years I taught MS-263, 'Application of Computers in Oceanography'. Early on I taught it with the Wang, later with HPL and FORTRAN, then MATLAB. At a MATLAB conference in San Jose, I lamented that no basic programming text using MATLAB was in print. A publisher's representative casually asked why I didn't write one. I did. It was rejected. I persisted, self-published, and sold my book on CD through Amazon. 'Introduction to Programming with MATLAB for Scientists and Engineers' went viral. It sold several hundred copies.

It is now nearly 39 years since I met Dennis on the Gyre. Mark Yarbrough and Mike Feinholz continue to operate Dennis' Marine Optical Buoy, MOBY, in Hawaii, and Stephanie Flora performs the complex processing of data from the autonomous buoy in MATLAB on her Mac. She posts the data on the Internet for use by the international remote sensing community to atmospherically correct ocean color imagery from a series of satellites that evolved from CZCS. The data are stored in an advanced MLDBASE format, but our programs can still read the data taken on the Gyre and my hundreds of CTD profiles and current meter records. Though I retired 12 years ago, I still use MATLAB (on a MacBook). Not long ago I dusted off our CZCS programs and ran the MATLAB version I had named PSD (DSP spelled backwards or, tongue in cheek, perhaps 'Pretty Simple Dennis'). It ran in 10 seconds.

Look what has happened since the heady days of the Wang 720C. My simple benchmark program tells the tale of the increasing speed of the computers I have used over the years. Sadly, I never ran the benchmark on the Wang, which may have been faster than the HP-9825.

| COMPUTER | YEAR | RUN TIME (sec) | LANGUAGE |
|---|---|---|---|
| Cray-1 | 1976 | 0.01 | FORTRAN (Los Alamos) |
| HP-9825 | 1977 | 1 | HPL |
| VAX 11/780 | 1979 | 1.5 | Unix/FORTRAN (NOAA MD) |
| HP-85A | 1979 | 80 | BASIC |
| HP-9826 | 1985 | 9 | HPL |
| MicroVAX II | 1985 | 0.31 | Unix/FORTRAN |
| Toshiba 3100 PC | 1986 | 0.3 | MATLAB |
| Toshiba 4400 PC | 1992 | 0.04 | MATLAB |
| VAX 4000 | 1992 | 0.19 | Unix/FORTRAN |
| Toshiba 4800 PC | 1994 | 0.012 | MATLAB |
| MacBook | 2009 | 0.0000073 | MATLAB |

I am now long departed from the computer scene at MLML, where the Internet and laptops reign supreme. Now everyone owns a supercomputer or two, not only on the desktop but as a smartphone in their pocket. I don't know what computers, large or small, MLMLers now use. The only things I might recognize at MLML today are in the hallways. When the architects consulted me about distributing Ethernet cables throughout the building, I suggested cable trays along the hallways. Look up and you may see them now.

Epilogue from Jeff Arlt (iTech MLML):

As of May 2016, those Ethernet cables continue to carry 1s and 0s up and down the hallways of MLML. The ‘core’ of the network has continued to evolve and is now made of glass: fiber-optic cables that connect the Cisco network switches in the closets of each building wing back to the server room at 1 gigabit per second.

When MLML moved back to Moss Landing in 2000, the MicroVAX II was replaced by Sun UltraSPARC servers that hosted the MLML web server and other servers supporting our connection to the Internet and communication with the World Wide Web. The computing environment has continued to evolve over the years, from Apple Xserve servers and HP DL360 servers to our present virtual computing environment, based on a state-of-the-art Springpath hyper-converged platform.

The network has evolved so that faculty, researchers, students, staff and visitors can connect to the MLML campus network from any of their wired or wireless devices, with traffic traveling over the 1 Gb/s connection to CENIC's CalREN (California Research and Education Network). And it carries more than data: voice and video now run through those cables that Dr. B. so ingeniously hid while keeping them easy to access.

We are streaming our weekly seminars on our YouTube channel.
